
I am a Machine Learning Researcher at Sony Interactive Entertainment (PlayStation) and a Ph.D. candidate in Computer Science in the Computer Graphics and Vision Lab at Portland State University, where I'm fortunate to be advised by Dr. Feng Liu. My research interests lie broadly in computational visual perception, generative AI, novel view synthesis, machine learning safety, adversarial robustness, robotics, and precision agriculture. In the summer of 2023, I was an Applied Scientist Intern on Amazon’s Imaging Science team. In the fall of 2024 and the winter of 2025, I completed a co-op as a Machine Learning Researcher at Sony Interactive Entertainment (PlayStation). Want to know more or connect? Check out these links:


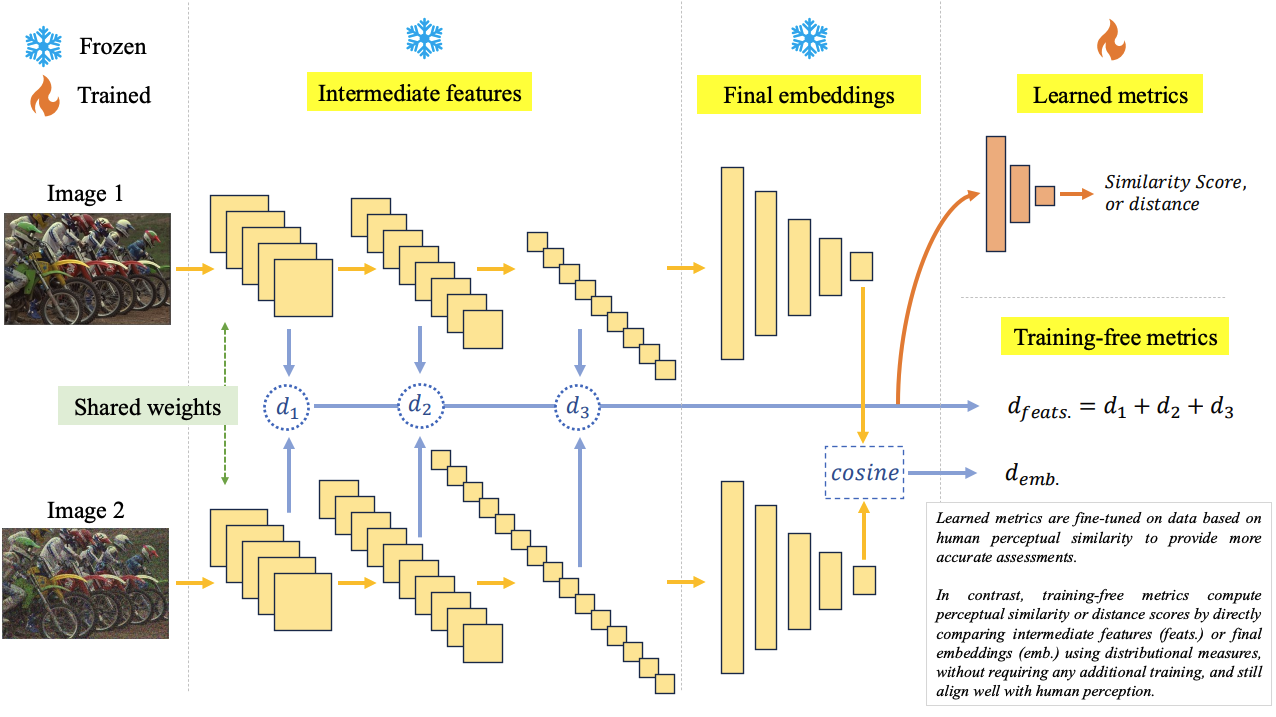

Abhijay Ghildyal, Nabajeet Barman, Saman Zadtootaghaj

IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2025

arXiv / code / bibtex

Prior perceptual similarity metrics built on foundation models rely only on the final layer or embedding. In contrast, this work investigates intermediate features, which remain largely unexplored in low-level perceptual similarity metrics. We show that intermediate features are more effective and that simple feature distance measures applied to them, with no training required (zero-shot), can outperform existing metrics.


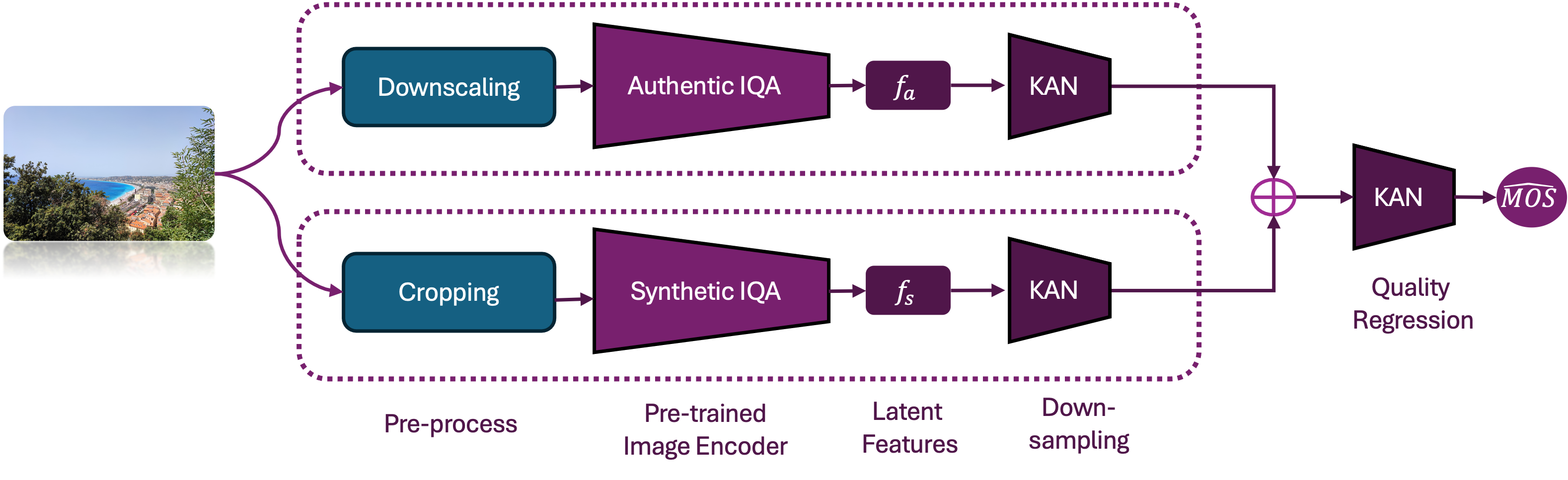

Nasim J. Avanaki, Abhijay Ghildyal, Nabajeet Barman, Saman Zadtootaghaj

Advances in Image Manipulation (AIM) Workshop at the European Conference on Computer Vision (ECCV), 2024

arXiv / code / bibtex

We developed a compact, lightweight No-Reference Image Quality Assessment (NR-IQA) model with a dual-branch architecture: one branch is trained on synthetically distorted images and the other on authentically distorted images, which improves generalization across distortion types. The model achieves state-of-the-art (SOTA) performance on the validation and test sets of the ECCV AIM UHD-IQA challenge while running nearly 5.7 times faster than the fastest SOTA model.


Abhijay Ghildyal, Feng Liu

Transactions on Machine Learning Research (TMLR), 2023, Featured Certification (Spotlight 🌟, top ~0.01% of accepted papers)

arXiv / code / OpenReview / bibtex

In this study, we systematically examine the robustness of both traditional and learned perceptual similarity metrics to imperceptible adversarial perturbations.


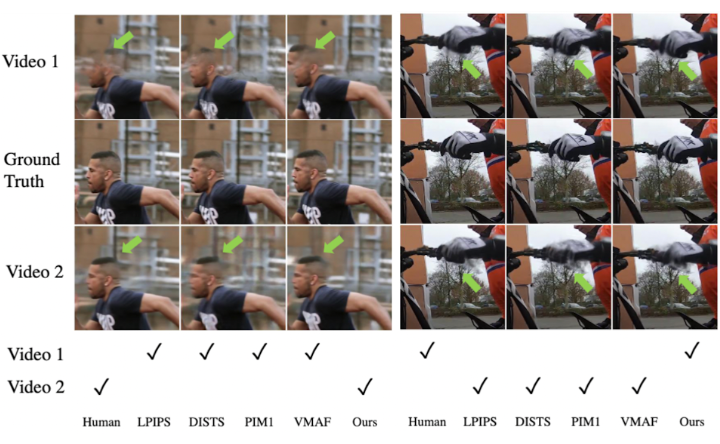

Qiqi Hou, Abhijay Ghildyal, Feng Liu

European Conference on Computer Vision (ECCV), 2022

arXiv / code / video / bibtex

We developed a perceptual quality metric for evaluating video frame interpolation results. Our method learns perceptual features directly from videos rather than from individual frames.


Abhijay Ghildyal, Feng Liu

European Conference on Computer Vision (ECCV), 2022

arXiv / code / video / IQA-PyTorch / bibtex

We investigated a broad range of neural network elements and used our findings to develop a robust perceptual similarity metric. Our shift-tolerant perceptual similarity metric (ST-LPIPS) is consistent with human perception and is less susceptible than existing metrics to imperceptible misalignments between two images.


Design and source code from Jon Barron's website.